So the Nepomuk project is over (as in Nepomuk – the big research project, not Nepomuk in KDE), but thanks to Mandriva and the new French research project Scribo I will continue working on the semantic desktop in KDE. Scribo is all about bringing natural language processing (NLP) to the desktop, and Mandriva will again (as it did with Nepomuk) bring it to the KDE desktop. This is exciting, especially since it integrates very well with the ideas of the semantic desktop: we can analyze emails, text documents or web pages, extract machine-readable information from them, and then use that information within Nepomuk.

As a first taste of what can be done, I created a little plugin system for text analysis (not unlike my annotation system for Nepomuk, which is still in playground, trying to mature).

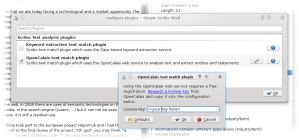

So far I have implemented two plugins which analyze a text and propose certain entities or statements. The first one uses the keyword extraction developed by DERI Galway. It provides a list of keywords with corresponding relevance values, which could then be used as tags or be mapped to resources in the Nepomuk store (imagine projects or persons or whatever). The second one uses the OpenCalais web service by Reuters, which uses a huge database and some fancy algorithms to extract entities and statements from the text. An example can be seen in the following screenshot: “Linux” has been detected as a Technology and can be found twice in the text as such. Like the keyword extraction, OpenCalais also provides a relevance value. In addition, however, we get the position(s) in the text and the type of the entity (based on an ontology created by Reuters).
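To make the plugin idea a bit more concrete, here is a minimal C++17 sketch of what such an interface could look like. Everything in it is hypothetical: `EntityProposal`, `TextAnalyzerPlugin` and `FrequencyKeywordPlugin` are illustrative names, not the actual Scribo API, and the frequency-based scoring is a naive stand-in, not DERI's keyword extraction or OpenCalais. It only mirrors the data described above: a label, an optional entity type, a relevance value and the positions in the text.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical data model: one proposal per detected keyword or entity.
struct EntityProposal {
    std::string label;                   // surface text, e.g. "linux"
    std::string type;                    // entity type; empty if the plugin has none
    double relevance = 0.0;              // 0..1, higher means more relevant
    std::vector<std::size_t> positions;  // character offsets of each occurrence
};

// Hypothetical plugin interface: every analyzer (keyword extraction,
// a web service client, ...) would implement analyze().
class TextAnalyzerPlugin {
public:
    virtual ~TextAnalyzerPlugin() = default;
    virtual std::vector<EntityProposal> analyze(const std::string& text) const = 0;
};

// Naive stand-in for a keyword extractor: relevance is a word's frequency
// relative to the most frequent word. This is NOT the DERI algorithm.
class FrequencyKeywordPlugin : public TextAnalyzerPlugin {
public:
    std::vector<EntityProposal> analyze(const std::string& text) const override {
        // Collect the start offset of every occurrence of each (lowercased) word.
        std::map<std::string, std::vector<std::size_t>> hits;
        std::size_t i = 0;
        while (i < text.size()) {
            if (!std::isalpha(static_cast<unsigned char>(text[i]))) { ++i; continue; }
            const std::size_t start = i;
            std::string word;
            while (i < text.size() && std::isalpha(static_cast<unsigned char>(text[i]))) {
                word += static_cast<char>(std::tolower(static_cast<unsigned char>(text[i])));
                ++i;
            }
            // Skip short words as a crude substitute for a stop-word list.
            if (word.size() > 3) hits[word].push_back(start);
        }

        std::size_t maxCount = 0;
        for (const auto& [word, pos] : hits) maxCount = std::max(maxCount, pos.size());

        std::vector<EntityProposal> out;
        for (const auto& [word, pos] : hits) {
            out.push_back(EntityProposal{word, "",
                static_cast<double>(pos.size()) / static_cast<double>(maxCount), pos});
        }
        // Most relevant proposals first.
        std::sort(out.begin(), out.end(), [](const auto& a, const auto& b) {
            return a.relevance > b.relevance;
        });
        return out;
    }
};
```

Called on `"Linux is great. I run Linux."`, this sketch proposes `linux` with relevance 1.0 at offsets 0 and 22, followed by `great` with 0.5. A web-service plugin in the style of OpenCalais would additionally fill in `type`, which is why the field is part of the shared proposal structure rather than plugin-specific.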

I think this is already quite nice. Now it is time to use this stuff in something more than a test app, for example to propose annotations for files and emails. (BTW: I already implemented a plugin for my annotation framework based on the Scribo framework. Ah, isn’t it nice when it all fits together?)

Anyway, this is what Scribo will bring us: NLP. Enjoy, discuss, flame, praise. :)

Rock on! After seeing more and more machine-learned intelligence lately, and how useful it is getting (Wolfram Alpha being a good example), I’ve started getting a bit hyped :-)

Very interesting stuff!

Just how advanced is Scribo?

This is great news! I was thinking about something like this a while ago when reading a post you wrote about Nepomuk, but I thought it would be out of the reach of the project and too early given the limited scope of Nepomuk integration in KDE so far.

It seems I have found something to look forward to in 4.4 before 4.3 is even out.

This is very interesting. I studied computational linguistics as a minor subject, so I know this is a difficult task. I’m not sure a remote web service is the right backend, but it’s interesting nonetheless. The parsing and word analysis could even be used for machine translation.

@mutlu: I think trueg was more concerned with the fundamental framework and services. The integrative work like GUI design and interfacing with existing applications should probably be done by someone else.

Well, so far I have been trying to use any system I can get my hands on as a plugin, in order to tune the API. I will not invent new NLP algorithms myself; I am “only” integrating. A web service is a very convenient way to do a first test.

You probably know about this, but DBpedia has a nice web service, and you can even download their whole RDF dump if you feel like it :)

While I really like NLP, I’m not sure it can have a concrete use on the desktop. Except for a grammar checker, of course… but that’s waaaaay too hard to do (in French, at least).

Anyway, good luck! I was also sceptical when you first talked about Nepomuk… but you finally showed us that it can be useful!

Very exciting to read about! :-)

Can you give any tips on how to use KDE’s playground repository and compile these new components?

:-)

Submitting documents online for processing raises a fairly big privacy concern, if I’m not mistaken. Hopefully this can be accomplished without Reuters being able to read all of my email.

That said, I’m excited about the technology :)

Of course you are right. Like I said, this is only the beginning, and using a web service for this kind of analysis cannot be the final answer. But it gives us plenty of room for experimentation.

Even better if you pin down entities to specific references by means of the identifiers at

http://fp7.okkam.org

Reuse those that already exist, or create (and, crucially, publish) your own if needed.

Claudia Niederee (formerly of the Nepomuk consortium and now working on OKKAM) should be able to show you how to take advantage of OKKAM.


Bravo! :D

I certainly hope this system (hopefully local, but I wouldn’t mind an online one, personally) finds its way onto my desktop VERY soon!

Its usefulness cannot be overstated…